DER model design process

To understand how users in different countries respond emotionally to different design styles and elements, and thereby strengthen rural cultural brands, this study integrates the EmoRuralSim simulator, emotion simulation technology, language simulation technology, AI technology, and text mining technology into a DER relational model. Together, these components form a system for capturing and analyzing the relationship between user emotions and design elements, so applying the DER model to rural cultural brand construction combines several technologies in a single analytical system. At the core of this system is the EmoRuralSim simulator, which is built on the deep learning frameworks TensorFlow and PyTorch and uses convolutional neural networks (CNNs) and recurrent neural networks (RNNs) to simulate the emotional and linguistic features of users from multiple countries. The emotion simulation technology applies emotion analysis algorithms in the simulator to generate virtual user groups from 15 countries; by reproducing emotional curves and behavioral patterns, these groups are intended to reflect emotional responses under different cultural backgrounds. The language simulation technology uses natural language processing (NLP) and semantic analysis to reconstruct user comments and interactive behaviors, improving the realism and diversity of the simulation results. The AI technology provides optimization through a deep-learning-based recommendation system that dynamically analyzes the match between geographical regions and product style keywords and offers personalized design recommendations.
Text mining technology analyzes user comment data in detail, using word vectorization, topic modeling, and sentiment classification to reveal associations between design elements and user emotions. In addition, the DER model introduces time-related factors and cross-emotional impact factors into its simulation equations to capture the dynamic changes and interactions of user emotions. The result is an intelligent system that captures and analyzes user emotional responses in real time, providing data support and optimization recommendations for rural cultural brand design.

The EmoRuralSim simulator developed in this study builds on an analysis and integration of existing multimodal emotion computation and NLP technologies, with the aim of constructing an accurate and adaptable virtual simulation platform for user emotion and language behavior. Rather than being built from scratch, the simulator leverages the computational capabilities and flexibility of the open-source deep learning frameworks TensorFlow and PyTorch, combining CNN and RNN algorithms to capture and analyze the emotional and linguistic features of users from multiple countries. During development, the study first reviewed existing emotion generation algorithms and selected accurate, robust sentiment lexicons and semantic analysis models, specifically the Valence Aware Dictionary and sEntiment Reasoner (VADER) sentiment analysis model and Bidirectional Encoder Representations from Transformers (BERT) embeddings. These models provide the foundation for sentiment recognition and text understanding. The study then modeled and dynamically updated emotional states by combining a multilayer perceptron (MLP) with Long Short-Term Memory (LSTM) networks. On this basis, data augmentation techniques were used to preprocess and expand large-scale multilingual corpora so that the model generalizes across languages and cultural backgrounds. The core architecture of the simulator is designed for high-concurrency processing and real-time responsiveness, using distributed computing and parallel processing to improve computational efficiency. Specifically, a Parameter Server architecture manages and synchronizes large-scale model parameters, reducing computational latency and communication overhead.
Additionally, graph neural networks (GNNs) are utilized to enhance information propagation and aggregation capabilities in complex relational networks, thereby strengthening the interactive effects of emotion and language behaviors.

Experimental environment

To ensure the accuracy and reproducibility of the experiments, this study specified the hardware and software environment, including the computational resources, operating system, and data analysis tools used. The specific parameters are listed in Table 1:

Table 1 Hardware and Software Environment for the experiments.

Emotion simulation is performed by the EmoRuralSim simulator using emotion generation algorithms. Drawing on emotion lexicons, emotional rules, and emotion models, it simulates the emotional states of users from various countries18. Because the emotion models are multidimensional, the simulation considers several emotional dimensions, such as joy, anger, sadness, and happiness, which makes it more accurate19. This allows virtual users to express a range of emotions. The main calculations are as follows:

(1) The sentiment score is calculated as shown in Eq. (1):

$$E=\frac{\sum_{i=1}^{n}\left(w_i\cdot f_i+\sqrt{S_i}\right)\cdot\log\left(\frac{f_i}{\alpha+S_i}\right)\cdot\int_{0}^{\infty}\exp(-\lambda t)\,dt}{\prod_{i=1}^{n}\left(1+e^{-(\beta\cdot S_i+\gamma)}\cdot\int_{0}^{\infty}\exp(-\eta_i t)\,dt\right)} \tag{1}$$

In Eq. (1), \(E\) represents the emotion score; \(w_i\) is the weight of the i-th emotional word; \(f_i\) is the frequency of the emotional word in the text; \(S_i\) is the intensity of the emotional word; \(n\) is the number of emotional words; and \(\alpha\), \(\beta\), \(\gamma\), \(\lambda\), and \(\eta_i\) are adjustment parameters.
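As a minimal sketch, assuming all adjustment parameters are already known and that \(\lambda\) and every \(\eta_i\) are positive, Eq. (1) can be evaluated directly. Under that assumption each improper integral has the closed form \(\int_0^\infty e^{-ct}\,dt = 1/c\), so no numerical integration is needed. The function name `emotion_score` and its argument names are hypothetical.

```python
import math


def emotion_score(w, f, S, alpha, beta, gamma, lam, eta):
    """Evaluate Eq. (1) for n emotional words (hypothetical helper).

    w, f, S, eta: per-word weights, frequencies, intensities, and decay rates eta_i.
    alpha, beta, gamma, lam: scalar adjustment parameters.
    Assumes lam > 0 and eta_i > 0, so int_0^inf exp(-c t) dt = 1 / c.
    """
    if lam <= 0 or any(e <= 0 for e in eta):
        raise ValueError("lam and every eta_i must be positive for the integrals to converge")

    # Numerator: sum_i (w_i f_i + sqrt(S_i)) * log(f_i / (alpha + S_i)), times 1/lam.
    numerator = 0.0
    for w_i, f_i, S_i in zip(w, f, S):
        # The logarithm requires f_i / (alpha + S_i) > 0.
        numerator += (w_i * f_i + math.sqrt(S_i)) * math.log(f_i / (alpha + S_i))
    numerator *= 1.0 / lam

    # Denominator: prod_i (1 + exp(-(beta S_i + gamma)) / eta_i).
    denominator = 1.0
    for S_i, eta_i in zip(S, eta):
        denominator *= 1.0 + math.exp(-(beta * S_i + gamma)) * (1.0 / eta_i)

    return numerator / denominator
```

Replacing the integrals with their closed forms keeps the sketch exact and avoids introducing quadrature error.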

(2) Emotional intensity is calculated as shown in Eq. (2):

$$S=\frac{\sum_{i=1}^{n}\left(S_i\cdot\log\left(\frac{f_i+\delta}{\epsilon}\right)+\sqrt{w_i}\cdot\left(\tau+S_i\right)^{-1}\right)\cdot\int_{0}^{\infty}\exp(-ks)\,ds}{\int_{0}^{\infty}\exp(-\kappa_i s)\,ds\cdot\sum_{j=1}^{m}\left(\psi_j\cdot e_j\right)} \tag{2}$$

In Eq. (2), \(S\) denotes emotional intensity; \(e_j\) is the score of emotional dimension \(j\), and \(m\) is the number of emotional dimensions; \(\delta\), \(\tau\), \(k\), \(\kappa_i\), and \(\psi_j\) are adjustment parameters.
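If \(k\) and \(\kappa_i\) are assumed positive (the text does not state their ranges), both improper integrals in Eq. (2) have closed forms, and the intensity reduces to a scaled ratio of the weighted word term to the weighted dimension scores:

$$\int_{0}^{\infty}\exp(-ks)\,ds=\frac{1}{k},\qquad\int_{0}^{\infty}\exp(-\kappa_i s)\,ds=\frac{1}{\kappa_i}\qquad(k>0,\ \kappa_i>0)$$

$$S=\frac{\kappa_i}{k}\cdot\frac{\sum_{i=1}^{n}\left(S_i\cdot\log\left(\frac{f_i+\delta}{\epsilon}\right)+\sqrt{w_i}\cdot\left(\tau+S_i\right)^{-1}\right)}{\sum_{j=1}^{m}\left(\psi_j\cdot e_j\right)}$$

Under this assumption, the two decay parameters affect Eq. (2) only through the ratio \(\kappa_i/k\).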

(3) Multidimensional emotional states are represented as shown in Eq. (3):

$$A=\left[\frac{e_1+\mu}{\theta+e_2},\frac{e_2+\sigma}{\varphi+e_3},\dots,\frac{e_m+\aleph}{\beth+e_m}\right]^{T}+\int_{-\infty}^{\infty}\left(\xi\cdot\cos(\omega t)+\zeta\cdot\sin(\omega t)\right)dt \tag{3}$$

Equation (3) describes \(A\) as an m-dimensional vector, where \(e_i\) denotes the score of the i-th emotional dimension. \(\mu\), \(\theta\), \(\sigma\), \(\varphi\), \(\aleph\), \(\beth\), \(\zeta\), \(\xi\), and \(\omega\) are adjustment parameters.

(4) The emotional weight is calculated as shown in Eq. (4):

$$w_i=\frac{\prod_{j=1}^{m}\left(1+e^{-\delta\cdot S_i\cdot e_j}\right)\cdot\left(\epsilon_y+\sum_{k=1}^{n}\sqrt{f_k}\cdot\int_{-\infty}^{\infty}\exp(-\rho s)\,ds\right)\cdot\left(\int_{0}^{\infty}\exp(-\lambda_i t)\,dt\right)}{\int_{-\infty}^{\infty}\exp(-\rho s)\,ds\cdot\left(\int_{0}^{\infty}\exp(-\eta_j t)\,dt\right)} \tag{4}$$

In Eq. (4), \(e_j\) represents the score of emotional dimension \(j\); \(f_k\) denotes the frequency of emotional word \(k\); and \(\delta\), \(\epsilon_y\), and \(\rho\) are adjustment parameters.

In this study, emotional simulation technology accurately captures and simulates user emotional states through the implementation of the four core formulas described above. Equation (1) comprehensively quantifies emotional scores by integrating emotional word weights, frequencies, intensities, adjustment parameters, and integral terms. Equation (2) uses logarithmic transformations and integrals of emotional word frequencies and intensities to generate more refined estimates of emotional intensity. Equation (3) depicts the dynamic changes in emotional states through multidimensional vectors, adjustment parameters, and integrals of cosine and sine functions. Finally, Eq. (4) optimizes the distribution of emotional word weights by combining emotional dimension scores, emotional word frequencies, and complex integral terms. Together, these equations ensure that emotional simulation technology dynamically and accurately reflects the complexity and variability of user emotions.

Language simulation is performed by the EmoRuralSim simulator using natural language generation algorithms. By training on a large corpus of real language data, the generator learns language structures and patterns and then produces simulated language data for virtual users20. The DER model takes emotional states as input and generates text that matches the emotional context, fusing emotion and language21. The key calculations are as follows:

(1) The language generation probability is calculated as shown in Eq. (5):

$$P(W_e\mid Q_g)=\prod_{t=1}^{T}\left(P\left(W_t\mid\{W_p\}_1^{t-1},Q_g,S_x\right)\cdot\int_{0}^{\infty}\exp(-\lambda t)\,dt\right) \tag{5}$$

In Eq. (5), \(P(W_e\mid Q_g)\) represents the probability of generating opinion text \(W_e\) given context \(Q_g\); \(S_x\) denotes the initial emotional state; \(T\) represents the sequence length; \(W_t\) is the t-th element of the sequence; and \(\{W_p\}_1^{t-1}\) denotes the preceding \(t-1\) elements of the sequence.
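Eq. (5) is an autoregressive factorization, so its value is usually accumulated in log space to avoid numerical underflow for long texts. The sketch below assumes that the per-step conditional probabilities \(P(W_t\mid\{W_p\}_1^{t-1},Q_g,S_x)\) have already been produced by some language model (not specified in the text) and that \(\lambda>0\), so each integral factor equals \(1/\lambda\). The function name is hypothetical.

```python
import math


def sequence_log_prob(token_probs, lam):
    """Log of Eq. (5), given the T per-step conditional probabilities.

    token_probs: list of P(W_t | W_<t, Q_g, S_x), one value per generated token.
    lam: positive decay parameter; int_0^inf exp(-lam t) dt = 1 / lam.
    Each factor contributes log(p_t) - log(lam) to the sum.
    """
    if lam <= 0:
        raise ValueError("lam must be positive")
    return sum(math.log(p) for p in token_probs) - len(token_probs) * math.log(lam)
```

Taking `math.exp` of the result recovers Eq. (5) itself when the sequence is short enough not to underflow.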

(2) The calculation of emotional intensity is shown in Eq. (6):

$$Q_g=\left(W\cdot E\cdot U\cdot S_x+b\right)\cdot\left(\sum_{k=1}^{n}\frac{\sqrt{S_k}}{\alpha+S_k}\cdot\int_{0}^{\infty}\exp(-\eta_k t)\,dt\right) \tag{6}$$

In Eq. (6), \(W\) represents different texts; \(E\) denotes the emotion embedding matrix; \(U\) represents the weight matrix; \(b\) denotes the bias vector; \(S_k\) represents the intensity of emotion word \(k\); and \(\alpha\) and \(\eta_k\) are adjustment parameters.

(3) The training loss is defined in Eq. (7):

$$L=\frac{1}{N}\sum_{i=1}^{N}\sum_{t=1}^{T_i}\log P\left(\{W_t\}_i\mid\{W_p\}_1^{t-1},\{Q_g\}_i,\{S_x\}_i\right)\cdot\int_{-\infty}^{\infty}\exp(-\rho s)\,ds \tag{7}$$

In Eq. (7), \(L\) represents the loss function, which measures the gap between generated and actual opinion texts; \(N\) denotes the number of training samples; \(i\) is the sample index; and \(T_i\) is the sequence length of the i-th sample.

(4) The calculation of emotional weights is shown in Eq. (8):

$$W_t\sim P\left(W_t\mid\{W_p\}_1^{t-1},Q_g,S_x\right)\cdot\left(\sum_{j=1}^{m}\frac{e^{-\delta S_i e_j}}{1+e^{-\gamma e_j}}\cdot\int_{-\infty}^{\infty}\exp(-\zeta t)\,dt\right) \tag{8}$$

In Eq. (8), \(e_j\) represents the score of emotional dimension \(j\).

Equation (5) calculates the probability of generating text from the initial emotional state, the context, and the preceding elements. Equation (6) combines the text, emotion embedding matrix, weight matrix, and bias vector to estimate emotional intensity. Equation (7) measures the discrepancy between generated and actual text through log-probabilities weighted by an integral term. Equation (8) samples each element \(W_t\) from the conditional distribution of Eq. (5), reweighted by a term built from the emotional dimension scores \(e_j\), which adjusts the distribution of emotional word weights. Together, these equations let the simulation reflect the complexity and variability of user emotions and tie emotion closely to the generated language.

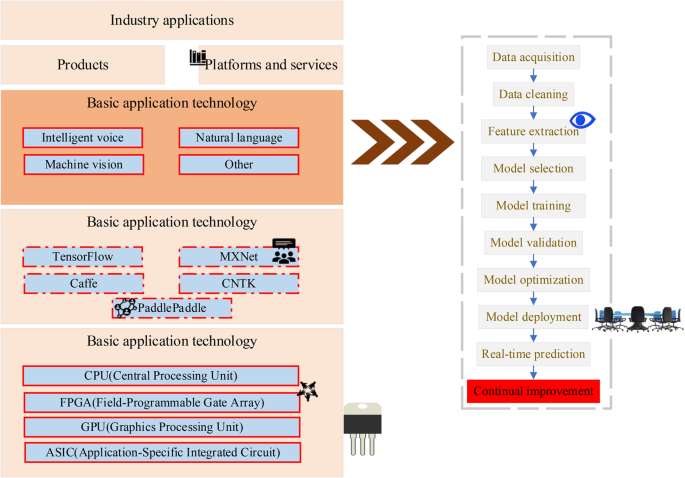

AI technology emulates human intelligent behavior by having computer systems replicate human thinking and decision-making processes22. Its core methods include machine learning, deep learning, and data mining. By learning patterns and rules from data, computer systems can make autonomous decisions and predictions, simulating human intelligent behavior23,24. The architecture of AI technology is illustrated in Fig. 1:

Fig. 1

The architecture of AI technology.

Mathematical formulas are essential tools for examining how AI technology can be integrated with rural cultural branding25. The formulas below express basic principles of machine learning and deep learning; by learning patterns in data and simulating decisions, they support key functions such as emotion simulation and language generation26. The weights these models learn and the loss functions they minimize are what connect them to emotion-driven recommendations and emotional resonance.

(1) Linear regression is calculated as shown in Eq. (9):

$$y=\beta_0+\beta_1x_1+\beta_2x_2+\dots+\beta_vx_v+\epsilon \tag{9}$$

In Eq. (9), \(y\) represents the predicted output; \(x_1\), \(x_2\), …, \(x_v\) denote the \(v\) input features; \(\beta_0\), \(\beta_1\), \(\beta_2\), …, \(\beta_v\) are the weights, with \(\beta_0\) acting as the intercept; and \(\epsilon\) represents the error term.
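A minimal illustration of Eq. (9), assuming a single input feature (\(v=1\)) so that the least-squares estimates of \(\beta_0\) and \(\beta_1\) have a closed form. The function names are hypothetical, and the data in the usage check are toy values, not results from this study.

```python
def predict(beta, x):
    """Eq. (9) without the error term: y = beta_0 + sum_k beta_k * x_k."""
    return beta[0] + sum(b * xi for b, xi in zip(beta[1:], x))


def fit_simple_ols(xs, ys):
    """Ordinary least squares for one feature (v = 1).

    beta_1 = cov(x, y) / var(x) and beta_0 = mean(y) - beta_1 * mean(x).
    """
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    sxx = sum((x - x_mean) ** 2 for x in xs)
    beta_1 = sxy / sxx
    beta_0 = y_mean - beta_1 * x_mean
    return [beta_0, beta_1]
```

For several features, the same estimates come from solving the normal equations, which a linear algebra library would normally handle.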

(2) Logistic regression is given by Eq. (10):

$$P(Y=1\mid x)=\frac{1}{1+e^{-(w_0+w_1x_1+w_2x_2+\dots+w_mx_m)}} \tag{10}$$

In Eq. (10), \(P(Y=1\mid x)\) represents the probability that input \(x=(x_1,\dots,x_m)\) belongs to category 1, and \(w_0\), \(w_1\), \(w_2\), …, \(w_m\) denote the weight coefficients.
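Eq. (10) is the logistic (sigmoid) function applied to a linear combination of the inputs. A small sketch with a hypothetical function name; it branches on the sign of the linear term so that `math.exp` never overflows for large negative inputs.

```python
import math


def logistic_prob(w, x):
    """Eq. (10): P(Y=1 | x) = 1 / (1 + exp(-(w_0 + sum_k w_k x_k)))."""
    z = w[0] + sum(wk * xk for wk, xk in zip(w[1:], x))
    # Numerically stable sigmoid: only exponentiate non-positive values.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)
```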

(3) The computation of a multilayer perceptron (MLP) is shown in Eqs. (11) and (12):

$$Z^{(l+1)}=W^{(l+1)}a^{(l)}+b^{(l+1)} \tag{11}$$

$$a^{(l+1)}=\sigma\left(Z^{(l+1)}\right) \tag{12}$$

In Eqs. (11) and (12), \(Z^{(l+1)}\) represents the weighted sum of layer \(l+1\); \(W^{(l+1)}\) is its weight matrix; \(b^{(l+1)}\) is its bias vector; \(a^{(l+1)}\) and \(a^{(l)}\) are the activation outputs of layers \(l+1\) and \(l\), respectively; and \(\sigma\) is the activation function.
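The two MLP equations can be sketched as a loop over layers, where each layer computes the weighted sum of Eq. (11) and then applies the activation of Eq. (12). The sigmoid default, the list-of-rows matrix layout, and the function names are assumptions for illustration; the text does not state which activation the DER model uses.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(W, b, a_prev, activation=sigmoid):
    """One layer: Eq. (11) then Eq. (12).

    W: list of rows (out_dim x in_dim); b: bias list (out_dim); a_prev: inputs (in_dim).
    """
    # Eq. (11): Z = W a + b, computed row by row.
    z = [sum(w_ij * a_j for w_ij, a_j in zip(row, a_prev)) + b_i for row, b_i in zip(W, b)]
    # Eq. (12): a = sigma(Z), applied element-wise.
    return [activation(z_i) for z_i in z]


def mlp_forward(layers, x, activation=sigmoid):
    """Apply Eqs. (11)-(12) layer by layer; layers is a list of (W, b) pairs."""
    a = x
    for W, b in layers:
        a = layer_forward(W, b, a, activation)
    return a
```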

(4) The cross-entropy loss function is calculated as shown in Eq. (13):

$$L(y,\hat{y})=-\sum_{i=1}^{N}\left(y_i\log\hat{y}_i+(1-y_i)\log(1-\hat{y}_i)\right) \tag{13}$$

In Eq. (13), \(L(y,\hat{y})\) represents the binary cross-entropy loss; \(y_i\in\{0,1\}\) denotes the actual label of sample \(i\); \(\hat{y}_i\) is the model's predicted probability for that sample; and \(N\) is the number of samples.
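A direct transcription of Eq. (13), with a hypothetical function name. Clipping predictions away from 0 and 1 is an added safeguard, not part of the equation, so that the logarithm is always defined.

```python
import math


def binary_cross_entropy(y_true, y_pred, eps=1e-12):
    """Eq. (13): summed binary cross-entropy over N samples.

    Predictions are clipped to [eps, 1 - eps] so log(0) is never evaluated.
    """
    total = 0.0
    for y, p in zip(y_true, y_pred):
        p = min(max(p, eps), 1.0 - eps)
        total += y * math.log(p) + (1 - y) * math.log(1 - p)
    return -total
```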

(5) The weight update in the backpropagation algorithm is given by Eq. (14):

$$W^{(l+1)}\leftarrow W^{(l+1)}-\alpha\frac{\partial L}{\partial W^{(l+1)}} \tag{14}$$

In Eq. (14), \(\alpha\) denotes the learning rate, and \(\frac{\partial L}{\partial W^{(l+1)}}\) is the gradient of the loss function with respect to the weights.
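The update in Eq. (14) can be illustrated by training the logistic regression of Eq. (10) on the loss of Eq. (13). For a sigmoid output with binary cross-entropy, the gradient with respect to each weight reduces to \(\sum_i(\hat{y}_i-y_i)x_{ik}\) (with \(x_{i0}=1\) for the bias), which the sketch uses in place of a general backpropagation routine. The learning rate, epoch count, function names, and toy data are assumptions.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(X, y, lr=0.1, epochs=1000):
    """Fit Eq. (10) by repeatedly applying the update of Eq. (14) to the loss of Eq. (13).

    X: list of feature lists; y: list of 0/1 labels. Returns [w_0, w_1, ..., w_m].
    """
    d = len(X[0])
    w = [0.0] * (d + 1)
    for _ in range(epochs):
        grad = [0.0] * (d + 1)
        for xi, yi in zip(X, y):
            p = sigmoid(w[0] + sum(wk * xk for wk, xk in zip(w[1:], xi)))
            err = p - yi  # dL/dz for sigmoid + binary cross-entropy
            grad[0] += err
            for k, xk in enumerate(xi, start=1):
                grad[k] += err * xk
        # Eq. (14): W <- W - alpha * dL/dW
        w = [wk - lr * gk for wk, gk in zip(w, grad)]
    return w
```

Because this loss is convex in the weights, a small fixed learning rate is enough for the toy case; deeper networks would need the chain rule through every layer of Eqs. (11) and (12).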

(6) The distance used in the K-means clustering algorithm is calculated as shown in Eq. (15):

$$D(x,c_i)=\sqrt{\sum_{j=1}^{n}\left(x_j-c_{ij}\right)^2} \tag{15}$$

In Eq. (15), \(D(x,c_i)\) is the Euclidean distance between sample point \(x\) and the i-th cluster center \(c_i\); \(n\) is the number of features; \(x_j\) is the j-th feature value of \(x\); and \(c_{ij}\) is the j-th feature value of cluster center \(c_i\)27.
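Eq. (15) supplies the distance used in the assignment step of K-means. The sketch below pairs it with the standard Lloyd iteration (assign each sample to its nearest center, then recompute each center as the mean of its samples); random initialization from the data, the iteration cap, and all names are illustrative assumptions.

```python
import math
import random


def euclidean(x, c):
    """Eq. (15): D(x, c_i) = sqrt(sum_j (x_j - c_ij)^2)."""
    return math.sqrt(sum((xj - cj) ** 2 for xj, cj in zip(x, c)))


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's algorithm using Eq. (15) for the assignment step.

    points: list of equal-length tuples. Returns (centers, labels).
    """
    rng = random.Random(seed)
    centers = [list(p) for p in rng.sample(points, k)]
    labels = [0] * len(points)
    for _ in range(iters):
        # Assignment: nearest center under Eq. (15).
        labels = [min(range(k), key=lambda i: euclidean(p, centers[i])) for p in points]
        # Update: each center moves to the mean of its members.
        new_centers = []
        for i in range(k):
            members = [p for p, lab in zip(points, labels) if lab == i]
            if members:
                new_centers.append([sum(col) / len(members) for col in zip(*members)])
            else:
                new_centers.append(centers[i])  # keep an empty cluster's center unchanged
        if new_centers == centers:
            break  # assignments are stable
        centers = new_centers
    return centers, labels
```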

Text mining uses computers to process and analyze large volumes of textual data in order to extract useful information and patterns. Its basic workflow consists of text preprocessing, feature extraction, and model building28,29. Through these steps, it reveals patterns, sentiments, and other information in the text30. The architecture of text mining technology is depicted in Fig. 2:

Fig. 2

Architecture of Text Mining Technology.

In sentiment analysis within text mining, techniques such as term frequency-inverse document frequency (TF-IDF), cosine similarity, and the naive Bayes classifier are used to weight terms, compare texts, and classify sentiment polarity31. Quantifying emotions with these formulas makes it possible to extract more of the information embedded in textual data, supporting more precise emotion simulation and language generation32.

(1) Term frequency-inverse document frequency (TF-IDF) is calculated as shown in Eq. (16):

$$\mathrm{TFIDF}(t,d)=\mathrm{TF}(t,d)\cdot\log\frac{N}{\mathrm{DF}(t)} \tag{16}$$

In Eq. (16), \(\mathrm{TF}(t,d)\) is the frequency of term \(t\) in document \(d\); \(\mathrm{DF}(t)\) is the number of documents that contain \(t\); and \(N\) is the total number of documents.
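A small sketch of Eq. (16) over tokenized documents. Because the text does not say how \(\mathrm{TF}(t,d)\) is normalized, the code assumes the raw count divided by document length and uses the natural logarithm; other variants (raw counts, smoothed IDF) are also common. The function name and example tokens are hypothetical.

```python
import math
from collections import Counter


def tfidf(docs):
    """Eq. (16) for a list of tokenized documents.

    TF(t, d): count of t in d divided by len(d) (an assumed normalization).
    DF(t): number of documents containing t; N: number of documents.
    Returns one {term: score} dict per document.
    """
    N = len(docs)
    df = Counter()
    for doc in docs:
        df.update(set(doc))  # count each term at most once per document
    scores = []
    for doc in docs:
        counts = Counter(doc)
        scores.append({t: (c / len(doc)) * math.log(N / df[t]) for t, c in counts.items()})
    return scores
```

A term that appears in every document, such as "rural" in a corpus of rural-brand reviews, receives a score of 0 because \(\log(N/N)=0\).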

(2) Cosine similarity is calculated as shown in Eq. (17):

$$\text{CosineSimilarity}(A,B)=\frac{A\cdot B}{\lVert A\rVert_2\,\lVert B\rVert_2}=\frac{\sum_{i=1}^{n}A_iB_i}{\sqrt{\sum_{i=1}^{n}A_i^2}\cdot\sqrt{\sum_{i=1}^{n}B_i^2}} \tag{17}$$

In Eq. (17), \(A\) and \(B\) are the two text vectors being compared, and \(n\) is the number of vocabulary terms, that is, the length of each vector.
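Eq. (17) in code, for two equal-length vectors such as TF-IDF vectors. Returning 0 when either vector is all zeros is a convention added here, since the formula divides by zero in that case; the function name is hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Eq. (17): (a . b) / (||a||_2 * ||b||_2)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # undefined in Eq. (17); treated as "no similarity" here
    return dot / (norm_a * norm_b)
```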

(3) The naive Bayes classifier computes the posterior probability of a text category as shown in Eq. (18):

$$P(C_k\mid X)=\frac{P(X\mid C_k)}{P(X)}\cdot P(C_k) \tag{18}$$

In Eq. (18), \(P(C_k\mid X)\) is the posterior probability that text \(X\) belongs to category \(C_k\); \(P(X\mid C_k)\) is the conditional probability of the text given category \(C_k\); \(P(C_k)\) is the prior probability of category \(C_k\); and \(P(X)\) is the marginal probability of the text. The classifier is called naive because it assumes that words are conditionally independent given the category, so \(P(X\mid C_k)\) is computed as the product of the per-word conditional probabilities.
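A minimal multinomial naive Bayes classifier following Eq. (18). Since \(P(X)\) is the same for every category, the sketch compares \(\log P(C_k)+\sum_w \log P(w\mid C_k)\) across categories and picks the largest. Add-one (Laplace) smoothing, skipping words outside the training vocabulary, and all names and toy labels are assumptions, not details given in the text.

```python
import math
from collections import Counter, defaultdict


def train_nb(docs, labels):
    """Estimate log P(C_k) and per-class word counts from tokenized documents."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    vocab = set()
    for doc, c in zip(docs, labels):
        word_counts[c].update(doc)
        vocab.update(doc)
    log_prior = {c: math.log(n / len(labels)) for c, n in class_counts.items()}
    return log_prior, word_counts, vocab


def predict_nb(model, doc, alpha=1.0):
    """Return argmax_k of log P(C_k) + sum_w log P(w | C_k), i.e. Eq. (18) without P(X)."""
    log_prior, word_counts, vocab = model
    V = len(vocab)
    best, best_score = None, -math.inf
    for c, lp in log_prior.items():
        total = sum(word_counts[c].values())
        score = lp
        for w in doc:
            if w in vocab:  # unseen words are skipped
                # Laplace-smoothed estimate of P(w | C_k).
                score += math.log((word_counts[c][w] + alpha) / (total + alpha * V))
        if score > best_score:
            best, best_score = c, score
    return best
```

Working with log-probabilities turns the product in the naive independence assumption into a sum and avoids underflow on long comments.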

(4) Latent Dirichlet allocation (LDA) for topic modeling is computed as shown in Eq. (19):

$$P(w\mid\alpha,\beta)=\frac{\Gamma\left(\sum_{i=1}^{K}\alpha_i\right)}{\prod_{i=1}^{K}\Gamma(\alpha_i)}\cdot\prod_{i=1}^{K}\frac{\Gamma(n_i+\alpha_i)}{\Gamma(n_i)}\cdot\prod_{j=1}^{V}\frac{\Gamma(m_{ij}+\beta_j)}{\Gamma\left(\sum_{j=1}^{V}\beta_j\right)} \tag{19}$$

In Eq. (19), \(\alpha\) and \(\beta\) are hyperparameters; \(K\) is the number of topics; \(V\) is the vocabulary size; \(n_i\) is the number of words in the document assigned to topic \(i\); and \(m_{ij}\) is the count of word \(j\) under topic \(i\).

(5) Sentiment polarity in text mining analysis is calculated as shown in Eq. (20):

$$\text{SentimentPolarity}(t)=\frac{\sum_{i=1}^{n}\text{SentimentScore}(w_i)}{n} \tag{20}$$

In Eq. (20), \(w_i\) is the i-th word of text \(t\); \(n\) is the number of words in \(t\); \(\text{SentimentPolarity}(t)\) is the sentiment polarity of text \(t\); and \(\text{SentimentScore}(w_i)\) is the sentiment score of word \(w_i\)33.
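Eq. (20) reduces to an average of per-word scores. The sketch assumes a lexicon mapping words to scores (the example lexicon is hypothetical and is not VADER's) and gives words missing from the lexicon a score of 0; the function name is also hypothetical.

```python
# Hypothetical toy lexicon for illustration only.
EXAMPLE_LEXICON = {"beautiful": 0.8, "authentic": 0.6, "boring": -0.6}


def sentiment_polarity(tokens, lexicon):
    """Eq. (20): mean SentimentScore(w_i) over the n tokens of a text.

    Tokens absent from the lexicon score 0 (an assumption); an empty text returns 0.
    """
    if not tokens:
        return 0.0
    return sum(lexicon.get(t, 0.0) for t in tokens) / len(tokens)
```

Because the denominator is the full token count \(n\), neutral words dilute the polarity of a text; averaging only over lexicon hits would be a different design choice.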